Author: Erinne Paisley

Introduction

With the onset of the COVID-19 pandemic, classrooms around the world have moved online. Students from K-12 through university level are turning to their computers to stay connected to teachers and continue their education. This move online raises questions about the appropriateness of technologies in the classroom and how close to a utopian “Mr. Robot” we can, or should, get. One of the most contested uses of technology in the classroom is the adoption of Artificial Intelligence (AI) to teach.

AI in Education

AI includes many practices that process information in a way similar to how humans process information. Human intelligence is not one-dimensional and neither is AI, meaning AI includes many different techniques and addresses a multitude of tasks. Two of the main AI techniques that have been adapted for potential use in education are automation and adaptive learning. Automation means computers are pre-programmed to complete tasks without human input. Adaptive learning means that these automated systems can adjust themselves based on use and become more personalized.
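To make the idea of adaptive learning more concrete, here is a minimal sketch of a rule that raises or lowers the difficulty of the next exercise based on a student's recent answers. It is only an illustration: the function name `next_difficulty`, the 1 to 5 difficulty scale, and the 80% and 40% thresholds are assumptions made for this example, not a description of how any real educational product works.

```python
def next_difficulty(current_level, recent_results, min_level=1, max_level=5):
    """Pick the next difficulty level from a student's recent answers.

    recent_results is a list of booleans (True = correct answer).
    The thresholds and the 1-5 scale are hypothetical choices.
    """
    if not recent_results:
        # No evidence yet, so stay at the current level.
        return current_level

    accuracy = sum(recent_results) / len(recent_results)

    if accuracy >= 0.8:
        step = 1      # student is doing well: make it harder
    elif accuracy <= 0.4:
        step = -1     # student is struggling: make it easier
    else:
        step = 0      # keep practising at this level

    # Keep the result inside the allowed range of levels.
    return max(min_level, min(max_level, current_level + step))


if __name__ == "__main__":
    print(next_difficulty(3, [True, True, True, True, False]))
    print(next_difficulty(3, [False, False, True]))
```

Even a rule this small shows where human choices enter the system: someone decided what counts as "doing well" and how quickly a student can move up or down.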

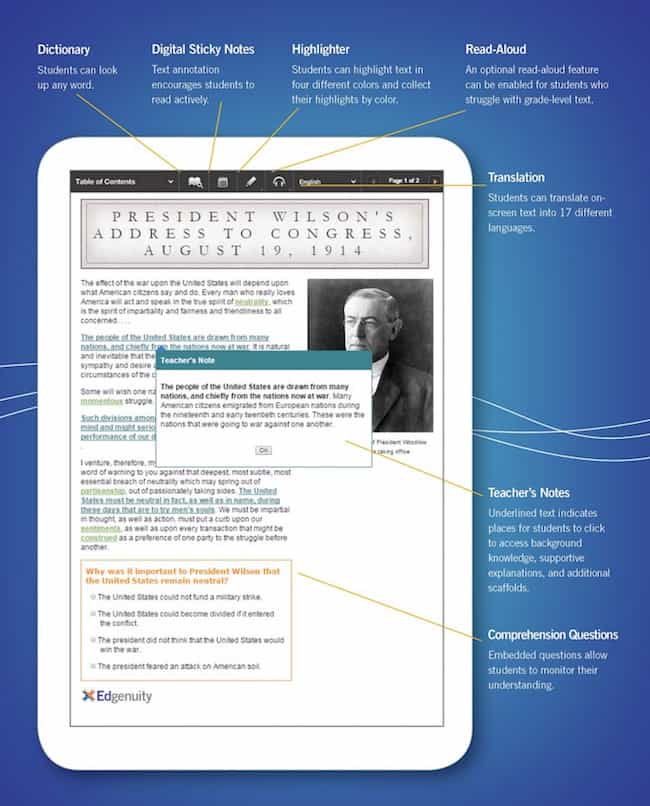

The idea of combining these AI techniques into some type of robot teacher, or “Mr. Robot,” sounds like something out of a sci-fi cartoon, but it is already a reality for some. A combination of these AI techniques has already been used to assess students’ prior and ongoing learning levels, place students in appropriate subject levels, schedule classes, and individualize instruction. In the United States, the Mississippi Department of Education has addressed a shortage of teachers through the use of an AI-powered online learning program called “Edgenuity”. This program automates lesson plans following the format of warm-up, instruction, summary, assignment, and quiz.

A screenshot from an Edgenuity lesson plan.

Despite how utopian an AI-powered classroom may sound, these technologies can reinforce significant issues of inequality and social injustice. In September 2019, the United Nations Educational, Scientific and Cultural Organization (UNESCO), along with a number of other organizations, hosted a conference titled “Where Does Artificial Intelligence Fit in the Classroom?” that explored these issues. One of the main concerns raised was algorithmic bias.

Algorithmic Bias in Education AI

The mainstream attitude towards AI is still one of faith: faith that these technologies are, as the name says, intelligent as well as objective. However, AI bias illustrates how these new technologies are very far from neutral. Joy Buolamwini explains that the biases, conscious or subconscious, of those who write the code become part of the digital systems themselves. This creates systems especially skewed against people of colour, women, and other minorities who are statistically underrepresented in the process of creating this code, including AI code. For instance, the latest AI application pool for Stanford University in the United States was 71% male.

Joy Buolamwini’s TEDx talk on algorithmic bias.

In the educational sector, people of colour, girls, and other minorities are already marginalized. Because of this, there is a concern that classroom AI with encoded biases would deepen these inequalities, for instance by trapping low-income and minority students in low-achievement tracks. This would create a cycle of poverty, supported by the educational framework itself, instead of having human teachers address students on an individual level and offer specialized support and attention to those facing adversity.

However, the educational field already has its own biases embedded in it, both within individual teachers and throughout the system more generally. Viewed in this way, the increased use of AI in classrooms offers an opportunity to reduce bias, if it is designed in a way that directly aims to address these issues. The work of designing AI that addresses bias and aims to be progressive has been taken on by teachers, librarians, students, and anyone in between.

Learning to Fight Algorithmic Bias

By including more voices and perspectives in the process of coding AI technologies, algorithmic bias can be prevented and, instead, a technological system that supports a socially just classroom can be built. In this final section, I will highlight two existing educational projects aimed at teaching students of all ages to identify and fight algorithmic bias while building technology that makes for a more equal classroom.

Algorithmic Bias Lesson Plans

The use of AI to create a more socially just educational system can start in the classroom, as Blakeley Payne showed when she ran a week-long ethics in AI course for 10- to 14-year-olds in 2019. The course included lessons on open-source coding and AI ethics, and ultimately taught students to both understand and fight against algorithmic bias. The lesson plans themselves are available for free online for any classroom to incorporate into its own lesson plans, even from home.

Students learn how to identify algorithmic bias during the one-week course.

Blakeley Payne’s one-week program focuses on ages 10-14 to encourage students to become interested in and passionate about issues of algorithmic bias, and the STEM field more broadly, from a young age. Students work on simple activities such as writing an algorithm for the “best peanut butter and jelly sandwich” in order to practice questioning algorithmic bias. This activity in particular has them question what “best” means. Does it mean best looking? Best tasting? Who decides what this means, and what are the implications of that decision?
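The sandwich question can be sketched in a few lines of code. The example below is not taken from the course materials; it is a hypothetical illustration in which the sandwich names, the scores, and the function `best_sandwich` are all made up. It shows that the same data produces a different “best” sandwich depending on which qualities the person writing the algorithm decides to count.

```python
# Made-up scores (0-10) for three hypothetical sandwiches.
# "affordability" is higher when the sandwich is cheaper to make.
SANDWICHES = {
    "classic":   {"taste": 7, "looks": 5, "affordability": 9},
    "gourmet":   {"taste": 9, "looks": 9, "affordability": 3},
    "crustless": {"taste": 6, "looks": 8, "affordability": 8},
}


def best_sandwich(sandwiches, weights):
    """Return the name of the sandwich with the highest weighted score.

    weights says how much each quality matters; qualities that are
    not listed in weights are ignored (weight 0).
    """
    def score(name):
        qualities = sandwiches[name]
        return sum(weights.get(q, 0) * value for q, value in qualities.items())

    return max(sandwiches, key=score)


if __name__ == "__main__":
    # Someone who only cares about taste:
    print(best_sandwich(SANDWICHES, {"taste": 1}))
    # Someone who cares about taste and about what it costs:
    print(best_sandwich(SANDWICHES, {"taste": 1, "affordability": 1}))
```

With these made-up numbers, counting only taste picks the gourmet sandwich, while counting taste and affordability together picks the classic one. Nothing about the sandwiches changed; only the definition of “best” did, which is exactly the kind of hidden decision the activity asks students to notice.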

Non-profits such as Girls Who Code are also actively working to design lesson plans and activities for young audiences that teach critical thinking and design when it comes to algorithms, including those behind AI. The organization runs after-school clubs for girls in grades 3-12, summer intensives, and college programs for alumni. Their programs focus technically on developing coding skills but also place a large emphasis on diversifying the STEM fields and creating equitable code.

Conclusion

A future with AI in the classroom is inevitable. This may not mean every teacher becomes robotic, but the use of AI and other technologies in the educational field is already happening. Although this raises concerns about algorithmic bias in the education system, it also creates more opportunities to re-think how technologies can be used to create a more socially just educational system. As we have seen through existing educational programs that teach algorithmic bias, even at the kindergarten age, interest in learning about, questioning, and re-thinking algorithms can easily be nurtured. The answer to how we create a more socially just educational system through AI is simple: just ask the students.

About the Author

Erinne Paisley is a current Research Media Masters student at the University of Amsterdam and completed her BA at the University of Toronto in Peace, Conflict and Justice & Book and Media Studies. She is the author of three books on social media activism for youth with Orca Book Publishing.